Alex Saroyan on Network Automation Nerds Podcast

Netris CEO and Co-Founder Alex Saroyan speaks about his network engineering journey, automation opportunities, and founding Netris from the ground up with host Eric Chou of the Network Automation Nerds podcast. Gain insight into how Alex discovered an unmet need in the industry and the steps it took to devise a product and solution with Netris. Watch the Network Automation Nerds podcast interview with Alex Saroyan below.

About Host Eric Chou of Network Automation Nerds Podcast

Eric Chou is a seasoned technologist with over 20 years of experience who has worked on some of the largest networks in the industry at Amazon, Azure, and other Fortune 500 companies. Eric is passionate about network automation, Python, and helping companies build better security postures. Eric shares his deep interest in technology through his books, classes, podcast, and YouTube channel. You can connect with Eric on LinkedIn or follow him on Twitter!

Introduction

Eric Chou (00:00:00): Network Automation Nerds Podcast. Hello, and welcome to the Network Automation Nerds Podcast, a podcast about network automation, network engineering, Python, and other interesting technology topics. I’m your host, Eric Chou. Today on the show, we’ll be talking to Alex Saroyan, CEO and co-founder of Netris.ai. Prior to co-founding Netris, Alex held roles at Ucom, Orange Telecom, Lycos Europe, and many other places. We’ll be talking to Alex about his journey in founding multiple companies, all the cool projects that he has worked on, and the products and features of his current company, Netris. Alex, welcome to the show.

Alex Saroyan (00:00:46): Hi, Eric. Thanks for having me. I’m very excited to be here.

Eric Chou (00:00:53): Yeah. It’s great to have you on the show, because I think what you are working on and your projects within Netris are so relevant to the audience of the show. I think it’s almost like, “Hey, why did I wait so long?” Mainly because I didn’t really know of its existence until I heard you talk on Art of Network Engineering. I’m sure some of our audience have heard your background story on Art of Network Engineering, but for those people who haven’t, can you tell us a little bit about yourself and how you got into technology, how you got into network engineering?

Alex Saroyan (00:01:36): Sure. I’ve loved tech since my childhood. I love computers, and I’m grateful that I got my first computer when I was in maybe fifth or sixth grade. I was going to this place where they were teaching kids to program. Back then, you had to program to be able to play something. That’s how I got into computers. When I was in high school, I was already very much into Linux and networking. Right after high school, I started building my career in network engineering. Just like many network engineers, I started by learning Cisco commands, typing commands into the Cisco CLI.

Alex Saroyan (00:02:43): I knew how to program, and I improved my programming skills over time to start automating my regular network engineering tasks. Over time, I embraced software-defined networking and APIs, and tried to do things with those constructs. But moving forward, I saw how the public cloud was defining a new standard user experience for DevOps engineers. I always thought of DevOps engineers and application infrastructure engineers as my customers, because as a network engineer I was managing a team of network engineers, and other teams were asking us to provide them with network resources: to create a VLAN, to provision something in a rack.

Alex Saroyan (00:03:53): Those people, those application and infrastructure engineers, were our customers. But when I saw how the public cloud was serving some of the same customers, I was like, “Geez.”

Eric Chou (00:04:07): You have competition.

Alex Saroyan (00:04:10): This is how it’s supposed to be. That’s how the idea of starting Netris was born.

Eric Chou (00:04:20): Right. That was interesting when you mentioned you started out programming and you had your first Linux experience back in, I don’t know, you said fifth grade, right? I think that’s interesting because a lot of times we hear about two extremes of how people get into technology. One is they start really, really young. They program their first game when they’re five years old and sell the game before they graduate elementary school. Then, at the other end of the spectrum, we have people who didn’t really touch networking, or didn’t even really know networking existed.

Eric Chou (00:05:00): They thought networking was just something that always worked, the internet had always been there, and then they worked backward. They enjoyed the fruits of social networks and then came back to networking. You were in the first camp, where you started young and knew this is what you wanted to do. With that background, you went into networking. Because of that background, I’m always curious about people who went down that path: how did you feel when you first looked at this domain-specific language called Cisco IOS?

Embracing Linux

Eric Chou (00:05:42): Did you think, “Oh, my God, this is so restrictive? I could do many more things if it just gave me APIs or SDKs.” What were your thoughts when you were first beginning to learn networking and you were restricted to the IOS environment?

Alex Saroyan (00:06:00): Oh, that’s a great question. I happened to embrace these two worlds at the same time, the Linux world and the Cisco IOS world, and both worlds were amazing. Back then, 20 years ago, Linux was this world of infinite possibilities, where you could use anything, create anything, modify anything. Cisco IOS was another world, where all the real networking was, because of performance. You would not be able to get the same performance in Linux; although you could do networking in Linux, performance-wise, Linux networking was prohibitive back then. I had these two worlds, and over time each world was missing something. In the Linux world, I was missing network performance, and in the Cisco CLI world, I was missing this-

Eric Chou (00:07:22): Flexibility.

Alex Saroyan (00:07:24): Yeah, flexibility of doing anything, creating something. When the public cloud evolved and I embraced it, I discovered this third world, which was a world of infinite flexibility, immediate provisioning, and self-service. Then, open networking started to show up with Cumulus Linux, one of the open networking operating systems.

Eric Chou (00:08:09): Absolutely.

Alex Saroyan (00:08:10): Yeah, I started to think of Cumulus Linux as Linux for networking devices, for switches. Basically, this would enable creative people, developers, and developers who are into networking to develop new functions and new features on the switch, which wasn’t possible in the Cisco world. That was another signal for us that the time is now, because we knew, “Okay, public cloud is the example of an experience that needs to be everywhere, not only in public cloud but also on-premises.”

Alex Saroyan (00:09:03): The possibility of running Linux on the switches enables people to develop programs that run literally on the switch. Regular Linux also evolved, and we have seen the rise of SmartNIC cards, DPDK acceleration, and eBPF. Those technologies were bringing performance closer to Linux and flexibility of development closer to networking hardware. Together with the need defined by the public cloud, these three things were like, “Hey, Alex, you need to do something.”

Eric Chou (00:09:54): Alex smells the opportunity. Yeah. No, I totally get what you’re saying, because you had previously … It wasn’t even Linux, right? It was BSDs, and you had your AT&Ts and your Sun Solaris banking on this world of … they weren’t even open-sourced. I don’t think they were open-source. Linux was this newer kid on the block, and you could do anything. You had all the look and feel of BSD and Unix, but you had the flexibility of open source and the good new tools and all of that. However, the problem, as you mentioned, was that you could stick multiple NIC cards in a Linux box and make it a de facto router, but you would never get that FPGA performance.

Eric Chou (00:10:40): You could never program that FIB to route packets that fast. On the other hand, you have the network devices, from whatever vendor you want, Juniper or Cisco, and they could route packets really, really fast. But on the management front, you’re in their walled garden. Juniper has BSD on the back end, but they never open up that kernel to you. You can’t just install, I don’t know, Vim onto the box.

Alex Saroyan (00:11:08): Exactly.

Eric Chou (00:11:10): Yeah. Then, the public cloud came around. That was the third leg, and there are so many things you’re talking about that I can identify with. You’re talking about Cumulus, having this natural bridge to the Linux kernel. We had Dinesh on a few episodes ago, and he talked about what Cumulus was doing: whatever you type into Linux, into your terminal, they have this shim that translates it into the lower-level commands they could execute directly on the box itself, whatever white-label hardware they were using. Through everything you talked about, I was just nodding my head. If I nod any more, my chin is going to fall off.

Eric Chou (00:11:57): It’s so interesting that you mentioned all this stuff; I was just like, “Yeah, that’s right.” But the difference between you and me is that you saw the opportunity and went ahead and founded Netris, and I didn’t. I’m here talking to you. Tell me, Alex, you talked about these signals, but how do you go from signals to making this a reality?

What if it works?

Alex Saroyan (00:12:27): I had already been experimenting with a few things. Back then, I was trying to parse or analyze the console output and make network engineers’ lives better. But when I noticed the signals, I decided to change the approach. Before that, I was trying to innovate on the existing stack. After seeing these signals, this new idea struck me. All of a sudden I thought, “Hey, we don’t really need to operate on the principles and approaches of traditional networking.” The public cloud is very different. It’s very prescriptive in so many cases.

Alex Saroyan (00:13:38): You cannot decide which protocols are used under the hood. Those types of things are decided automatically by the software under the hood of the cloud. What is left for engineers to decide are the things that we humans are good at: designing the networks, deciding how we connect our different data centers, how many data centers to create, really architectural things. I thought further, “Hey, the human mind is good at these architectural, creative things, but everything that is repetitive, and not really rocket science, can be handled by software.”

Alex Saroyan (00:14:35): What if it is handled by software, if the entire configuration is generated by software following human-defined requirements and policies? What if we take the human typing commands out of the whole process? We’re definitely going to benefit from a human-error perspective. We’re going to improve consistency, because, looking at our traditional network engineering history, we know that most failures are related to inconsistencies. I configure something, my colleague configures something else, a little bit different, and I come back and work on his configuration.

Alex Saroyan (00:15:30): I screw things up and the network blows up. We can’t afford that when we are providing self-service. We want to give DevOps engineers the opportunity to self-service, but we want to do this in a safe way. We can’t let them break the network. An important component of that whole thing was that software needed to configure everything. My co-founder and I did a couple of experiments. We picked a couple of basic use cases that every data center has. We created a prototype and tried to find the first adopter. We thought, if this is a thing, we should be able to find at least one company that will deploy this, that will give this a shot.

Alex Saroyan (00:16:41): We found the first two adopters, and they agreed to try our prototype. We learned a lot and saw a lot of issues in the first versions of the algorithm, but what we noticed is that it is not impossible. By listening to their feedback, we improved the core part of the algorithm. It was proof for us that the idea can work. That was internal validation, and we decided to start building on that.

Eric Chou (00:17:25): Oh, that’s cool. That’s cool. You actually went ahead and built a minimum viable product. Then, you validated that idea with two customers. Were they paying customers, or were they just graciously agreeing to test it out because they like you so much?

Alex Saroyan (00:17:50): Well, having prior relationships with these early adopter customers definitely helped a lot. I’m grateful that they trusted us, a team of a few people experimenting with something. The agreement was this: they would try it, and if it worked and got to the point where they could trust it and start moving their commercial customers to the new technology, at some point they would start to pay. We are grateful that during this experimental time they let us do all the experiments and handled all the pain that came with them. Eventually, we went into production with both customers, and they became our first paying customers.

What is the problem that Netris is trying to solve?

Eric Chou (00:19:06): Nice. Very cool. Of course, they have to trust you. They have to have some kind of confidence, and you jointly take that risk. It’s a risk on your part and a risk on their part as well. But they could see the potential of: what if this works? Down the line, they could see the potential. It was definitely a calculated risk, and I’m glad it worked out for you, because I think a lot of people try out MVPs in different ways. Maybe they’re more academic, so they try it out in a school network, like what OpenFlow did.

Eric Chou (00:19:44): They tried it out at Stanford, in a less pressured environment, and some people tried it out internally. I think there were a few projects, I don’t know, internally at Google, for example, with Kubernetes, where they did it for themselves, tried it out, and validated it internally. Because the company was big enough to get enough experience, even before they released the beta they were very well versed in the different use cases and breakage scenarios. That would be one traditional way, I would say.

Eric Chou (00:20:21): You put out the product, you find one or two customers you have a personal relationship with, and you try it out with them. That’s a good segue into my next question: can you tell us what the problem is? I think you dove into it a little bit when you said you look at these other developers as your internal customers. You’re trying to serve them, because if you don’t serve them well, your competitors, Amazon AWS and Azure, are right there: they could just put in their credit card and launch a few VMs and VPCs, right? Can you tell us what problem Netris is trying to solve?

Alex Saroyan (00:21:07): You can tell that the public cloud is successful and growing pretty well.

Eric Chou (00:21:13): Yeah, I could tell. I was with Amazon at that time. I was dying. We were dying trying to keep up with the demand. We couldn’t build data centers fast enough.

Alex Saroyan (00:21:22): Yeah, so there’s a reason for that.

Eric Chou (00:21:26): Yes.

Alex Saroyan (00:21:27): It’s convenient. Most businesses don’t really need to be … they don’t really need to manage the infrastructure. Before the public cloud, a lot of businesses were forced to manage their infrastructure because there was no other way. With the introduction of the public cloud, there was a new concept. You can still run your applications, but you don’t need to manage your infrastructure. You don’t need to think about power, cooling, network configuration, and server installations.

Alex Saroyan (00:22:16): You only need to focus on things that are specific to your business, and that’s logical. That’s how it should be. That’s why the public cloud started to grow. A lot of applications and a lot of teams are designed with the public cloud in mind: cloud-native applications, cloud-native teams, if you will. Developers using public cloud tools are using Terraform to provision their infrastructure resources. Some businesses, however, cannot be in the public cloud only. They need to be hybrid.

Alex Saroyan (00:23:07): They need to have something in the public cloud and something on-premises. Now, it is hard to apply two different methodologies to the two parts of your infrastructure. The on-premises infrastructure should be no different from the public cloud, because we have all seen that the public cloud is this convenient experience. When DevOps engineers use multiple public clouds, they still have a similar experience. Amazon and Google Cloud are two different providers, but with very similar experiences.

Alex Saroyan (00:23:50): They can deploy the same applications in both places. They can use Terraform with very similar constructs. But when they bring their third type of environment into the game, their on-premises environment, it is so much different.

Eric Chou (00:24:14): We will make a judgment on better or worse. The answer, obviously, from our conversation, is that it’s not as convenient. It’s definitely not as fast, but I’d like to hear the rest of the story.

Alex Saroyan (00:24:34): I don’t even want to call it better or worse, because for a lot of use cases, traditional networking is doing a really good job. There’s nothing wrong with traditional networking. It’s great for its use cases. We specifically focus on the other use cases, the ones related to cloud-native DevOps engineers. In the public cloud, it’s a normal situation for these DevOps engineers to go into the console, create a new VPC, VPC networking, throw a bunch of virtual machines into that VPC, and run something.

Alex Saroyan (00:25:24): That can be an experiment, that can be production. That can be a small production that they will scale in the future. But everything starts with VPC networking, and the creation of a VPC takes just seconds. What is a VPC? It’s this little window that says VPC name, VPC subnet, do you need NAT? Yes or no, and create or cancel. This is what networking is from a DevOps engineer’s perspective in the public cloud. Imagine they work this way for a few years, and then their business people say there are business reasons to also deploy a new on-premises location somewhere.
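The VPC form Alex describes has just three inputs: a name, a subnet, and NAT yes/no. As a minimal sketch, that request can be modeled and validated in a few lines of Python. The class name `VpcRequest` and the rule that the subnet must be a private range are assumptions made for illustration; this is not the Netris API or any cloud provider’s API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class VpcRequest:
    """Hypothetical model of the public-cloud 'create VPC' form."""
    name: str
    subnet: str
    nat: bool = False

    def validate(self) -> ipaddress.IPv4Network:
        """Return the parsed subnet, or raise ValueError if the form is invalid."""
        if not self.name.strip():
            raise ValueError("VPC name must not be empty")
        # IPv4Network is strict by default, so "10.10.0.1/16" (host bits set) is rejected
        net = ipaddress.IPv4Network(self.subnet)
        # Assumed policy for this sketch: VPC subnets must be private address space
        if not net.is_private:
            raise ValueError(f"{net} is not a private range")
        return net


req = VpcRequest(name="web", subnet="10.10.0.0/16", nat=True)
print(req.name, req.validate(), "NAT" if req.nat else "no NAT")  # web 10.10.0.0/16 NAT
```

Everything else (which devices, which VLANs or VNIs, where NAT runs) is deliberately absent from the request, which is the point Alex makes about the DevOps engineer’s view of networking.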

Alex Saroyan (00:26:15): Not in the public cloud, but somewhere on-premises. They go on-premises thinking VPC and Terraform, but what are they going to face? They’re going to face a ticketing system, email, and phone.

Eric Chou (00:26:31): They’re going to face VLANs or whatever overlay you use.

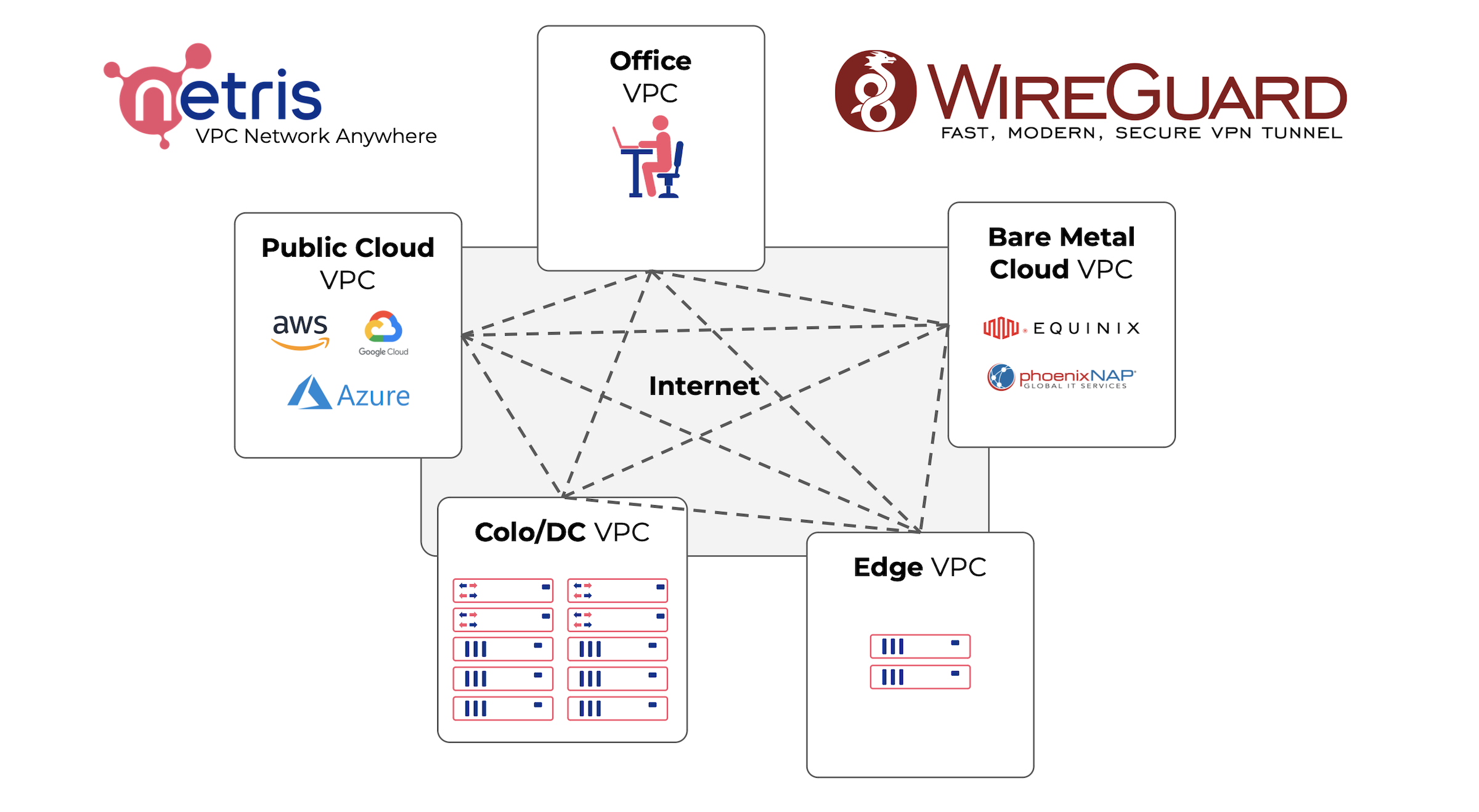

Alex Saroyan (00:26:38): Yeah. They’re going to find themselves in a whole different world where people don’t even speak the same language. What we did is create Netris. Netris is software that enables network engineers to create a network that gives DevOps engineers the ability to self-service, just like in the public cloud. Network engineers are able to mark resources and say, “We let these DevOps engineers manage this bunch of resources.” Then, DevOps engineers can easily go to the Netris web console, or use Terraform, whatever they like, and, very similarly to the public cloud, create a VPC-like construct and get it provisioned and ready to use immediately.

Eric Chou (00:27:45): Yeah. Would you say Netris provides this abstraction level to make networking more declarative rather than procedural? By declarative, we’re saying, “Hey, do you want BGP?” True or false, right? Yes or no. That’s versus procedural: say it’s a Cisco box running NX-OS, so you need to configure router bgp, the ASN, whatever. I think that’s what you’re saying, right? Netris provides this abstraction layer that gives on-premises networking the same experience as public cloud networking.

Alex Saroyan (00:28:28): Yeah, exactly. Abstraction and declarative interface are the words that we like to use, because there’s no one-to-one mapping. Some traditional network vendors do a one-to-one mapping of CLI commands into Terraform language. There is definitely a use case for this, but the cloud-native use case is not like that. You can’t do the one-to-one mapping. You want abstraction, where you say, “I want NAT, network address translation, and I don’t care how it works.”

Alex Saroyan (00:29:20): It’s up to Netris to figure out, “Okay, on which devices do I configure NAT? How do I organize high availability of these NAT boxes?” The same applies to all other network services, all kinds of layer two, layer three, layer four, and BGP, all the things that are essential to make your private cloud work.
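The declarative-versus-procedural distinction can be made concrete with a generic reconciliation step: the user states only the desired end state, and software computes what has to change to get there. The Python below is a hypothetical sketch of that general pattern, not Netris’s algorithm; the resource names (`vpc:web`, ...) and the create/delete/update action labels are assumptions for illustration.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> list[tuple[str, str]]:
    """Diff desired state against current state and list the actions to converge.

    Keys are resource names such as "vpc:web"; values are their attributes.
    The user only edits `desired`; deciding which commands run on which
    device is left to whatever executes the plan.
    """
    actions: list[tuple[str, str]] = []
    for name in sorted(desired.keys() - current.keys()):
        actions.append(("create", name))   # wanted but missing
    for name in sorted(current.keys() - desired.keys()):
        actions.append(("delete", name))   # present but no longer wanted
    for name in sorted(desired.keys() & current.keys()):
        if desired[name] != current[name]:
            actions.append(("update", name))  # present but drifted
    return actions


desired = {"vpc:web": {"subnet": "10.10.0.0/16", "nat": True},
           "vpc:db": {"subnet": "10.20.0.0/16", "nat": False}}
current = {"vpc:web": {"subnet": "10.10.0.0/16", "nat": False},
           "vpc:old": {"subnet": "10.99.0.0/16", "nat": False}}
print(plan(desired, current))
# [('create', 'vpc:db'), ('delete', 'vpc:old'), ('update', 'vpc:web')]
```

Running the same plan twice against a converged network yields an empty list, which is what makes the declarative style safe to repeat.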

Eric Chou (00:29:46): Right. If I’m a DevOps engineer, I don’t really care about VLANs. I care about isolation. I care about, I don’t know, the separation of concerns between the server team and the firewall team, but I don’t really care about the exact commands. I care about the end results, and Netris provides that separation layer. You mentioned Netris is software that you run to provide this abstraction layer. You talk to Netris instead of talking to the boxes directly. I assume this software runs on-prem; does it run in a VM, or on a physical box? How does it typically run to manage your on-prem devices?

Alex Saroyan (00:30:37): Netris controller, not to confuse with SDN controller.

Eric Chou (00:30:43): No.

Alex Saroyan (00:30:44): Yeah.

Eric Chou (00:30:45): SDN movement has died. Haven’t you heard? No, I’m just kidding. I remember the SDN controller, but maybe like 90% of people who are listening don’t. But yeah, so Netris controller?

Alex Saroyan (00:30:58): Well, there are definitely use cases for SDN controllers, and now there’s also intent-based networking, and there are many, many good use cases for intent-based networking. I’m just saying we don’t do this. We do VPC-type networking, which is different. We may not have a lot of the features that either SDN or IBN provide, because those two technologies were created for different use cases. Now, answering the question about the Netris controller.

Eric Chou (00:31:38): Sure.

Alex Saroyan (00:31:42): The Netris controller can run on pretty much any Linux machine. The installation process is very easy. You get a one-liner and just copy and paste it into almost any Linux machine. In a couple of minutes, you’ll have the Netris controller up and running. What happens behind the scenes? That one-liner installs a tiny K3s cluster, a one-node cluster, and downloads containers, because the Netris controller is a containerized application.

Eric Chou (00:32:19): Got it.

Alex Saroyan (00:32:21): That way, it is easier for us to develop and deliver to our customers.

Everything starts with planning.

Eric Chou (00:32:26): For sure. Okay. So you have a one-liner that creates a one-node Kubernetes cluster for them? Obviously, you have the pods and so on and so forth, but the controller … and then you’re up and running. Do you provide a GUI for people to get in and start configuring and adding devices?

Alex Saroyan (00:32:55): Most of our customers are either building a greenfield data center, or they are larger enterprises that already have something and are building a little cloud-native island, one or two racks of something new. Everything starts with planning. They go to the whiteboard and draw, “This is how many servers I imagine having. This is how I’m going to connect my upstreams. These are the public IP addresses that we’ve got.” Based on the drawing, they go and order hardware, power and cooling, and rack space.

Alex Saroyan (00:33:45): Then, before they even rack and stack the hardware, the first step is to get the Netris controller ready. For that, our customers usually use a one-unit server. They rack and stack that one-unit server. They connect a management connection right into the controller, and an outbound internet connection connects directly into the Netris controller. Then, they install the Netris controller with the one-liner, and it provides access to a blank controller. Then, the network engineer goes into the Netris controller and, remembering that whiteboard drawing and that list of IP addresses, translates it from the whiteboard and spreadsheets into Netris.

Eric Chou (00:34:37): Got it.

Alex Saroyan (00:34:39): We go to an entry site [inaudible 00:34:41] and we say, “Here are our public IP addresses. Here are our private IP addresses.” Then, we have this topology manager where you point and click to define all the devices: this is my switch one, this is the name of switch one. Netris will assign IP addresses and numbering automatically, things like that. Then, you draw your topology. That’s one way of doing it, through the GUI. But we also have a Terraform provider, a validated Terraform provider with all the documentation in the Terraform Registry.
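Automatic numbering of the kind Alex mentions usually means carving addresses out of a pool the engineer supplied once, instead of typing them per device. The sketch below hands each switch a /32 loopback from a pool using Python’s standard `ipaddress` module. The function name, the choice of loopbacks as the example, and the sorted-name ordering are all assumptions; the interview does not say how Netris allocates addresses.

```python
import ipaddress


def assign_loopbacks(switches: list[str], pool: str) -> dict[str, str]:
    """Give every switch a /32 loopback from `pool`, in sorted-name order.

    Sorting makes the result deterministic for a given switch list, so
    nobody hand-types the same addresses twice.
    """
    hosts = ipaddress.IPv4Network(pool).hosts()
    assignments: dict[str, str] = {}
    for name in sorted(switches):
        try:
            assignments[name] = f"{next(hosts)}/32"
        except StopIteration:
            raise ValueError(f"pool {pool} is too small for {len(switches)} switches") from None
    return assignments


print(assign_loopbacks(["spine1", "leaf1", "leaf2"], "10.255.0.0/24"))
# {'leaf1': '10.255.0.1/32', 'leaf2': '10.255.0.2/32', 'spine1': '10.255.0.3/32'}
```

Because the order depends on the full switch list, adding a switch can shift later assignments; a production system would persist allocations rather than recompute them each time.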

Alex Saroyan (00:35:26): Network engineers also can benefit from using Terraform and they usually do, so they can use Terraform to describe that topology in a declarative way. It’s minimal information that goes to Terraform, like a list of the switches. You need to describe how you imagine connecting links, and which port connects to which port. You can even use Terraform loops to describe that because if you have 20 switches, the way you connect your leaf switches to spine switches is probably very repetitive.

Alex Saroyan (00:36:12): You can describe that in four lines in Terraform. You put in those four lines, run terraform apply, and then you see the topology pop up in the Netris topology manager. When you get there, you just take that topology view, go physically to the data center, and, based on the diagram, start racking and stacking your switches.
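The reason a Terraform loop can describe a whole fabric in a few lines is that leaf-to-spine cabling is a simple cross product: every leaf connects once to every spine. The Python below generates that link list to show the repetition. The `swpN` port names (a Cumulus Linux convention) and leaf uplinks starting at port 49 are assumptions for the example, not a Netris or Terraform schema.

```python
from itertools import product


def leaf_spine_links(num_leaves: int, num_spines: int, first_uplink: int = 49) -> list[tuple[str, str]]:
    """Full-mesh leaf-to-spine cabling plan.

    Leaf N uses uplink ports first_uplink, first_uplink + 1, ... (one per spine);
    spine M uses downlink port N for leaf N.
    """
    return [
        (f"leaf{leaf}:swp{first_uplink + spine - 1}", f"spine{spine}:swp{leaf}")
        for leaf, spine in product(range(1, num_leaves + 1), range(1, num_spines + 1))
    ]


for a_end, z_end in leaf_spine_links(2, 2):
    print(a_end, "<->", z_end)
# leaf1:swp49 <-> spine1:swp1
# leaf1:swp50 <-> spine2:swp1
# leaf2:swp49 <-> spine1:swp2
# leaf2:swp50 <-> spine2:swp2
```

With 20 leaves and 4 spines the same call yields 80 links, which is the kind of list nobody wants to type by hand.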

Eric Chou (00:36:41): Or, better yet, you pass it on to some data center ops folks. You file a ticket and say, “Hey, man, when you have some free time, which is never, go ahead and rack and stack port one to port 16 on this guide, and so on and so forth.” You mentioned two ways, but somehow you need to correlate switch one. Switch one needs to physically map to this physical switch, top of rack in rack one. How do you bootstrap that? Is there still some manual work in the beginning, to configure a management IP, a hostname, or whatever for them to be discovered, or could that bootstrap be done by Netris as well?

Alex Saroyan (00:37:33): Right now, in the current release, we require you to install the operating system and copy and paste a one-liner-

Eric Chou (00:37:44): Got it.

Alex Saroyan (00:37:44): …from the Netris controller into the switch. That one-liner has a unique ID inside. When you copy and paste it, that’s how we identify which switch it is.

Eric Chou (00:37:56): Got it. Got it. No, go ahead.

Alex Saroyan (00:38:01): That’s the motion for today, but we are currently working on what we call zero-touch provisioning. Basically, you will be able to provide the MAC address, we will identify the switch based on the MAC address, and we will install the operating system and the Netris agent automatically.
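The core of MAC-based identification is a lookup from a hardware address, whether reported at boot or scanned from a barcode, to a switch already defined in the planned topology. MAC addresses show up in several notations (`0C-42-A1-...`, `0c42.a100...`, `0c:42:a1:...`), so they have to be normalized first. This is a hypothetical sketch; the function names and inventory format are illustrative, not part of Netris’s upcoming zero-touch provisioning feature.

```python
import re
from typing import Optional

_SEPARATORS = re.compile(r"[:.\-]")
_TWELVE_HEX = re.compile(r"[0-9a-f]{12}")


def normalize_mac(raw: str) -> str:
    """Canonicalize 0C-42-A1-..., 0c42.a100..., or 0c:42:a1:... to lowercase colon form."""
    digits = _SEPARATORS.sub("", raw.strip()).lower()
    if not _TWELVE_HEX.fullmatch(digits):
        raise ValueError(f"not a MAC address: {raw!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def identify_switch(reported_mac: str, inventory: dict[str, str]) -> Optional[str]:
    """Map a MAC seen at boot (or scanned from a barcode) to a planned switch name."""
    by_mac = {normalize_mac(mac): name for name, mac in inventory.items()}
    return by_mac.get(normalize_mac(reported_mac))


planned = {"leaf1": "0C:42:A1:00:00:01", "leaf2": "0c42.a100.0002"}
print(identify_switch("0c-42-a1-00-00-01", planned))  # leaf1
```

An unknown MAC returns `None`, which a provisioning service could treat as “not in the plan, do not configure.”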

Eric Chou (00:38:26): Nice. Nice. Yeah. No, the only reason I ask is because you mentioned spines and leaves and Clos networks. I think one of the biggest things we used to try to figure out is how to even abstract that. Zero-touch provisioning, as you mentioned: we know the MAC address on the management interface, and we know the inventory label. Maybe you could just scan in the inventory label, then you do your DHCP server. You know the IP address, so those could be deterministic, and you go on from there.

Eric Chou (00:39:03): Then, we could offload that labeling and inventory to the vendors, for example. Then, they could ship it to us already in a container, and I’m talking about big truck containers where there are like 20 racks: 10 racks of compute, 10 racks of database, or whatever. That’s why I asked. It’s great that you’re moving in that direction, toward zero-touch provisioning. But I would say that really only applies to large enterprises and maybe cloud providers.

Eric Chou (00:39:38): Most people are happy with just the one-liner. You’re already saving so much work. It’s like, “Oh, my God, I’m already 90% done, so I don’t care that I have to copy and paste in the console for just this one thing 20 times.”

Alex Saroyan (00:39:53): Yeah, exactly. This is more about giving a really great experience. Oftentimes, the person who racks and stacks and the person who can install the operating system are different people. We imagine that in the future we will have a mobile application for the data center engineer. The data center engineer doesn’t need to take a laptop. They take the mobile app, and they will see that topology view in the mobile app. They will be able to tap on the switch, like, “Hey, I’m racking and stacking this switch in this rack.”

Alex Saroyan (00:40:50): They tap on it, and then it will request access to the camera. Like you mentioned, there is this tag with a barcode that shows the MAC address, so the engineer will just scan that barcode, like in a supermarket. Based on that barcode, we will automatically identify the switch. That’s where we’re going, and that’s why we need zero-touch provisioning.

Eric Chou (00:41:23): Oh, I’m so ready for that. It’s so cool. It would be really cool, right? Because not a lot of companies can exert that pressure or that demand power on their server providers. There are some people who could say, “Hey, just ship us 50 racks, all labeled and all cabled up.” Then, you just provide the patch panel on the side of that container, not Kubernetes containers, but actual physical containers.

Alex Saroyan (00:42:03): Physical containers.

Eric Chou (00:42:04): Yeah, a physical container where you just, “Okay, now, you just need 16 uplinks to these big switches on the core switches.” But then the rest of the 50 racks, it’s already cabled. Not many people could do that. I think that’s where a lot of times when I talk to my cloud provider friends, they live in a bubble. They’re like, “Oh, why don’t you just take it to a data center tech?” You’re like, “No, 90% of the world doesn’t do that.” That almost sounds like magic when you describe it. We know it’s very real and it just takes time and energy to code it up.

Eric Chou (00:42:42): But everything else, the tech stack, is already there for you. I’m really looking forward to that. If one day you can do that, I’ll be the first to retweet that video or whatnot. Like, “Man, this is what I should have had in 2012 or whatever.”

Alex Saroyan (00:42:57): Yup, we’re moving closer to that.

Eric Chou (00:43:02): Nice, nice. I’m excited for it. You mentioned it’s a Kubernetes container image that you pull down. But obviously, when I looked at your demonstration videos, they show a front-end, and obviously you need some GUI for people to click and drag. Can you share some of the technology that you use? No secret sauce, just whatever you can disclose, like, “This is what we use for the front-end. Maybe we use some web server and so on, a database backend.”

Alex Saroyan (00:43:41): Sure. The backend, the controller backend, is developed using Node.js. The front-end is a-

Eric Chou (00:43:57): No, just kidding. No, I’m a Python snob, right? I guess you could say that. But okay, Node.js it is.

Alex Saroyan (00:44:05): Yeah, that’s the backend, and it is an API-first type of thing. The backend has all these APIs, and the front-end uses these APIs. But our Terraform provider also uses those APIs, and our Kubernetes operator uses the same APIs. That’s only the front-end and backend controller part. There’s also the Netris agent, a little piece of software sitting inside a switch. That is developed in Go (Golang) because we need something that is high-performance: some switches have a performant ASIC but a small CPU.

Alex Saroyan (00:45:07): We need to be careful about CPU resources. Besides the front-end and backend, the controller also has a gRPC server for network switches and SoftGate. I will explain what SoftGate is soon. Network switches and SoftGate nodes connect to the controller using gRPC. This part is written in Go.

Eric Chou (00:45:38): I see.

Alex Saroyan (00:45:40): SoftGate, I mentioned SoftGate: it’s a Linux machine with a SmartNIC card running DPDK-accelerated software. SoftGate handles the networking parts that switches cannot do. Switches provide high-performance connectivity between your servers. But you need something else when you need connectivity with the outside world: network address translation, layer four load balancing, site-to-site traffic encryption like site-to-site VPN, and border routing functionality.

Alex Saroyan (00:46:28): Some of our customers plug directly into internet carriers or internet exchange points, and some of our customers use Cisco or Juniper routers upstream because they already had them. But other customers are not using that. They can use Netris SoftGate, and this DPDK-accelerated Linux machine can forward a hundred gigabits per second of traffic as a border router. The beauty of this solution is that you have the same machine for border routing, layer four load balancing, NAT, and site-to-site VPN.

Alex Saroyan (00:47:13): It’s not four different machines. It’s one machine providing all these functions, and you can scale that machine horizontally. You can do HA. It’s very similar to what the big cloud providers do under the hood.

Eric Chou (00:47:32): But that SoftGate machine is actually a physical machine, because it requires DPDK and a SmartNIC or whatever is on board. Is it something you buy and ship to the customer? Is it white label, i.e., the customer could say, “I’m going to buy this Netris SoftGate-compliant box, and then I just install the software”? Or is it a combo deal where there’s a SoftGate model one, two, and three that I just order from you?

Alex Saroyan (00:48:05): Good question. We are trying to be neutral. We do have system requirements, because forwarding a hundred gigabits per second in a one-unit server is a big deal. The server needs to have 128 gigs of RAM. It needs to have two modern CPUs, that is, two actual sockets with a minimum of eight cores in each socket. It needs a SmartNIC of at least a hundred gigabits per second. We have a few SmartNIC models that we have validated.

Alex Saroyan (00:48:51): Theoretically, we can support just about any SmartNIC, but we like to validate things so we can tell our users, “Hey, this is validated. This definitely works.” Then they buy just about any server, Dell, HP, Supermicro. For SmartNICs, they usually buy Nvidia SmartNICs because they are, I don’t know, popular and performant.
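The minimums Alex lists (128 GB of RAM, two sockets with at least eight cores each, and a SmartNIC of at least 100 Gbps) can be expressed as a simple pre-flight check. This is a hypothetical Python sketch: `ServerSpec`, `check_softgate_spec`, and the NIC model names are placeholders, and it does not reflect Netris's actual validated hardware list.

```python
from dataclasses import dataclass

# Minimums as stated in the interview; constant names are illustrative.
MIN_RAM_GB = 128
MIN_SOCKETS = 2
MIN_CORES_PER_SOCKET = 8
MIN_NIC_GBPS = 100


@dataclass
class ServerSpec:
    ram_gb: int
    sockets: int
    cores_per_socket: int
    nic_gbps: int
    nic_model: str


def check_softgate_spec(spec: ServerSpec, validated_nics: set[str]) -> list[str]:
    """Return human-readable problems; an empty list means the spec passes."""
    problems: list[str] = []
    if spec.ram_gb < MIN_RAM_GB:
        problems.append(f"RAM {spec.ram_gb} GB is below {MIN_RAM_GB} GB")
    if spec.sockets < MIN_SOCKETS:
        problems.append(f"{spec.sockets} socket(s), need {MIN_SOCKETS}")
    if spec.cores_per_socket < MIN_CORES_PER_SOCKET:
        problems.append(
            f"{spec.cores_per_socket} cores per socket, need {MIN_CORES_PER_SOCKET}"
        )
    if spec.nic_gbps < MIN_NIC_GBPS:
        problems.append(f"SmartNIC {spec.nic_gbps} Gbps is below {MIN_NIC_GBPS} Gbps")
    # Flag NICs outside the validated list; per the interview, others may still work.
    if spec.nic_model not in validated_nics:
        problems.append(f"SmartNIC model {spec.nic_model!r} is not on the validated list")
    return problems
```

The NIC-model check is reported like the others here for simplicity; a real tool might downgrade it to a warning, since Alex notes that other SmartNICs can theoretically work.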

Eric Chou (00:49:22): They’re the first, right? They invented the term, what is it? DPU, data processing unit, versus GPU and CPU.

Alex Saroyan (00:49:34): Yeah, that too.

Eric Chou (00:49:36): Nobody else could use that term now. Anyway, people do the same thing and just call it something different.

Alex Saroyan (00:49:41): Yup. That’s the current situation. Besides, we’re planning to come up with a reference architecture with a couple of vendors. It will be a set of switches, SoftGate hardware and compute hardware. Users will have all these components. We only provide software. We’re not going into the hardware reselling business. No, we’re just a software company.

Eric Chou (00:50:24): Yeah. Yeah, for sure. That’s interesting. You’re purely a software play, even though you’re actually dependent on some hardware. Of course, you’ve got to forward packets, but you do provide validation and certification of these NICs that you know work well. Not to say it won’t work with other NICs or DPDK setups or whatever, but these are the ones you have validated and given your blessing to.

Alex Saroyan (00:50:57): Yeah, because of the way we think about why we charge our customers. We like to describe it this way: our customers are not paying us just for using Netris software. They are paying us for the experience. As a company, we feel responsible for the whole experience. In order to deliver the experience that we wish to deliver, we need to validate all these things. We do a whole bunch of different layers of validation. We use some data that is coming from our users.

Alex Saroyan (00:51:52): We run thousands of tests in our environments, a lot of automated tests on different hardware, with different configurations. We run different failure scenarios, like this cable failed, that device failed. We need to do all these things to ensure that we can provide users with the right experience. After doing all of that, we feel legit charging for the experience.

Eric Chou (00:52:25): Nice. I like how you emphasize the experience. That reminds me of Apple. I’m not paying just for this laptop. I’m paying for the actual Apple experience, my iPhone experience. That’s why they charge 30% more than whoever else is out there. But no, I’m just kidding. I totally agree. I think you are paying for the end-to-end experience. I personally use Django, which is a Python framework. I don’t pick it only for its current features; I pick it because I trust that whatever they roll out, say Django 4.0, is going to work with all the packages.

Eric Chou (00:53:12): It’s going to work with all the plugins they certify, and I won’t get caught off guard by incompatibilities like I would with WordPress or whatever. I do trust that. I think that’s very valuable, especially in the enterprise world. They don’t have the time or energy or resources to go test 10,000 solutions. They want to be able to say, “Here are the four solutions that you certify.” Out of these four, I trust your opinion that they will cover 80% of the use cases, and I pick the one that’s most suitable for me, because of budget, because of engineering resources, or whatnot.

Alex Saroyan (00:53:55): Yup. By the way, for the product itself that goes onto customer premises, that’s the agent and SoftGate, those high-performance parts are developed using Go. The DPDK accelerator is written in C and C++, but we do use Python for some internal purposes. Our NRA engineers run a lot of simulations to test the product, and they use a lot of Python scripts. The Python ecosystem seems to be really great for situations where you need to do a lot of experiments, a lot of testing, and a lot of changes.

Eric Chou (00:54:54): Yeah, it’s crazy.

Alex Saroyan (00:54:54): I know that people make changes every day, because every day they get new builds from developers with some new features, and they need to tweak their NRA testing accordingly.

Eric Chou (00:55:08): Yeah. For sure. I think Python’s great for … I’m just kidding. Of course, you pick the best tool for the job. Python is never going to be as fast as C, and it’s never going to be as fast as Go, but it has shown other strengths. Like you said, it’s quick to prototype. It has a good ecosystem. Obviously, it’s not a popularity contest, but being popular means bugs get discovered quicker, get fixed quicker, and so on. Those are its strengths. Definitely. You mentioned you’re a software play, and this is all shipped in different parts, whether it’s the agent, SoftGate, or the controller.

Eric Chou (00:55:49): How customizable or extensible is it? If I find something that’s not in the current controller, or if I want to add some features to the agent, what are some of the ways network developers could interact with it, enhance a feature, and maybe merge it back upstream into your code?

Alex Saroyan (00:56:15): Our controller API is fully open and documented. Some of our customers build their own product. They want to embed Netris into a bigger product that provides private or public cloud services. They consume our API, and we run the switches and SoftGate nodes. The SoftGate and switch agent software is currently closed source, but we are planning to open source the API on that side too in the future.

Eric Chou (00:57:04): Oh, no kidding. Okay.

Alex Saroyan (00:57:06): Yeah. There is some work that we need to finish before it’s ready for open sourcing, but eventually, we’ll get there. We want people to be able to make little tweaks to the agents too.

Eric Chou (00:57:21): Nice. You heard it here first. Alex has just promised. No, I’m just kidding. No, I’ll settle for APIs. At this point, I don’t even care whether it’s open source or not. I just want to be able to say, this is the stuff I want to process externally, and still somehow communicate with the controller, SoftGate, or the agent. But like you said, it’s API first, right? Whatever I use to talk to the controller is the same thing the front-end uses to talk to it. There’s no backdoor for the controller to access the database or whatnot.

Eric Chou (00:58:08): Everything is API first, so I think that’s great. That’s how modern tools have become. It’s always API parity. There’s no backdoor. I think that goes back to what you were saying about how the public cloud really changed that. Do you think, I don’t know, do you think EC2 has some backdoor to manage SQL? No. Each team stands on its own within Amazon, and they talk to each other through APIs, and that’s how they were able to roll out services so quickly. There are no backdoors, and everything’s API first. Cool, man. I like that very much.

Alex Saroyan (00:58:52): Yeah. It’s cool that you brought up this example specifically, because we’re currently working on a compute and network interoperability API. We’re developing that API so that it will be a standard. It will not require any backdoors. Right now, as a prototype, we’re doing this for just one compute provider. But in the future, the API will be designed so that any compute platform, be it VMware or Red Hat OpenShift or Rancher, whatever it is, will be able to plug into it.

Alex Saroyan (00:59:52): Because the goal is to make switch ports disappear for DevOps. Remember my example that in the public cloud, a DevOps engineer creates a VPC and throws a bunch of virtual machines on it. We need to replicate exactly the same experience on-premises. But today, even though we make it incredibly simple, DevOps engineers still need to select from the list of switch ports that network engineers allow them to use. That’s super simple, it’s just three clicks. But we want to get rid of that too. The way we envision it, there will be a process where the DevOps engineer creates a new VPC.

Alex Saroyan (01:00:47): That new VPC will automatically show up in your compute environment, like VMware or Rancher or whatever it is. When you open networking there, you will see the network that you created in Netris. When you request your virtual machines, Netris will automatically understand which switch ports these machines happen to be connected behind, and it will dynamically provision the underlying physical network for them.
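The step where Netris works out which switch ports a VPC's virtual machines sit behind can be sketched in a few lines of Python. This assumes the controller already knows which switch port each hypervisor host is cabled to (learned, for example, through LLDP, which is an assumption here) and receives VM placements from the compute platform. `HOST_TO_PORT` and `ports_for_vpcs` are hypothetical names, not the interoperability API Alex describes.

```python
from collections import defaultdict

# Hypothetical cabling map: hypervisor host -> (switch, port) it is plugged into.
HOST_TO_PORT = {
    "esx-01": ("leaf-01", "swp1"),
    "esx-02": ("leaf-01", "swp2"),
    "esx-03": ("leaf-02", "swp1"),
}


def ports_for_vpcs(
    vm_host: dict[str, str], vm_vpc: dict[str, str]
) -> dict[str, set[tuple[str, str]]]:
    """Return, for each VPC, the (switch, port) pairs that must carry it,
    given which host each VM runs on and which VPC each VM belongs to."""
    needed: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for vm, host in vm_host.items():
        needed[vm_vpc[vm]].add(HOST_TO_PORT[host])
    return dict(needed)
```

Recomputing the full set from current placements, rather than patching it incrementally, means a VM migration simply yields a new desired set that can be compared against what is already configured.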

The Two Pizza Team

Eric Chou (01:01:20): Nice, nice. That’s pretty ambitious, because I think the difference between AWS and on-prem is that AWS abstracts it all away. You don’t even know what the switch is, but on-prem, you actually know. It’s a little bit harder to say, “I want to duplicate that experience completely.” It’s different, but I like that goal. It’s audacious, and it’s a pretty strong goal to shoot for, definitely. Also, by the way, don’t take my word for it on API first at AWS. Go back to, I believe it was 2003, when Werner Vogels from Amazon actually came in.

Eric Chou (01:02:05): There were a bunch of articles about him. He came into Amazon as CTO and started mandating API first as the way to do things internally. He had the full backing of Jeff Bezos. That’s why in 2006 they were able to launch S3 so quickly, and the year after, EC2, and boom, 10,000 other services came after that. It’s because of that vision in 2003 that he came in and said API first. Don’t take my word for it. I’m just the messenger.

Alex Saroyan (01:02:40): No, that’s how you build your product in a modular way. You have a few small teams, and each small team is responsible for one aspect. When you have this API and a containerized product, it’s so easy. Each small team can work as a small team. That’s important as teams get bigger. They say, what one engineer can do in one month, two engineers can do in two months.

Eric Chou (01:03:17): Then three engineers will take five months, because now they have to discuss design patterns and get agreement and so on. But no, I see what you’re saying.

Alex Saroyan (01:03:31): There’s actually math research behind that and there’s a math formula for how you can calculate that complexity.
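Alex doesn't name the formula. One commonly cited candidate is Fred Brooks's observation in The Mythical Man-Month that if every pair of people must coordinate, communication paths grow as n(n-1)/2. A short Python illustration of that count, and of why splitting a team of ten into two teams of five cuts intra-team paths from 45 to 20 (ignoring whatever coordination the two teams still need with each other):

```python
def communication_channels(n: int) -> int:
    """Pairwise communication paths among n people: n * (n - 1) / 2."""
    if n < 0:
        raise ValueError("team size must be non-negative")
    return n * (n - 1) // 2


one_team = communication_channels(10)       # 45 paths
two_teams = 2 * communication_channels(5)   # 20 paths within the two teams
```

The count grows roughly with the square of team size, which is one way to read the two-to-five-person team limit Alex describes later in the conversation.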

Eric Chou (01:03:43): Pretty fascinating, isn’t it?

Alex Saroyan (01:03:46): Yeah. You want to preserve, you want to stay nimble.

Eric Chou (01:03:51): Yeah. Yeah. I don’t mean to just keep going on about AWS, but they do have this thing called the pizza team, the one pizza team. It’s like, your team needs to be small enough that you could feed everybody with one pizza. But of course, this is just a goal. Pretty soon, even by the time I was there, it was two pizza teams. There’s just no way. Do you want to just make that person die or what? There’s just no way, but it’s two pizza teams, and it’s just an idea.

Eric Chou (01:04:30): That team should be small and nimble enough, like you said, Alex, that they are able to move fast, try things out fast, and potentially fail fast and fail forward.

Alex Saroyan (01:04:41): Yeah. Actually, we found that peer reviews and continuous feedback are critically important. Through trial, error, and learning, we currently like to keep each of our teams between two and five people.

Eric Chou (01:05:03): That’s still a one pizza team unless you have somebody who’s a big eater. Like me, I’m a big eater.

Alex Saroyan (01:05:13): Yeah. That way we try to keep groups of people between two and five. If one team grows bigger than five, we usually separate it into two functional groups. We have this culture of feedback and transparency. When someone does something or plans something, we always ask each other for feedback, for peer review. Kind of like open source development culture. That helps a lot.

Eric Chou (01:06:05): Yeah. I agree. I think it’s good to have that feedback loop, and even sometimes to be brutally honest. If you can’t be honest internally, then would you rather fall on your face in front of the customer, or have a friendly conversation between colleagues, even if it hurts? Yeah. Alex, one burning question I have: your domain is netris.ai. By the way, we tried to register an AI domain. That’s actually, I think, the top-level domain of a British territory.

Eric Chou (01:06:42): All of them are super expensive, but anyway, what’s the AI bit for Netris? Is that where you’re going in the future, or do you actually have some artificial intelligence within your software?

Alex Saroyan (01:06:57): We don’t have any deep learning technology.

Eric Chou (01:07:01): Sure. Thank you for being honest. A lot of people would just start BS-ing, but thanks for being honest.

Alex Saroyan (01:07:08): Yeah. No, our algorithms are developed by humans.

Eric Chou (01:07:14): Nice.

Alex Saroyan (01:07:16): Tested by software that other humans develop.

Eric Chou (01:07:20): Created, sure.

Alex Saroyan (01:07:22): Yeah. I think the idea of AI here is more that the algorithm we have created takes away the stupid, repetitive part of the work that no one really likes. I think of people as creative creatures, and wasting creative creatures’ time on repetitive things is not good. It feels wrong. That’s what AI does. It takes away the boring part.

Eric Chou (01:08:04): Got it. Got it. No, I buy that. It’s like, I don’t know, “Hey, Alfred, go brew me some tea. Go make me sandwiches, because I need to focus on, I don’t know, solving world hunger.” The AI part is the robotics that handles your repetitive tasks, freeing you up to do the more creative work you’re meant to be doing.

Alex Saroyan (01:08:29): Yeah, and the way our agent works, the intelligence to configure network devices is not in the controller. It’s in the agent. Every agent works independently. Every switch has an agent, every SoftGate gateway has an agent, and they communicate with the controller, but they are not allowed to communicate with each other, because we want to have a stateless system.

Eric Chou (01:09:04): Got it, interesting.

Alex Saroyan (01:09:06): To make it stable, we want it to be stateless. They’re not allowed to communicate directly. They need to learn all their information from the controller, and the information that we store in the controller doesn’t include implementation-specific data. It’s only user-defined requirements or metadata coming from applications. When an agent boots up, it builds an understanding of its environment, like, “Oh, it seems like I just booted up on a switch, or I just booted up on a SoftGate. Okay. It seems like I’m running this version of Linux. It seems like I have DPDK acceleration enabled.

Alex Saroyan (01:09:57): Okay, then I need to install this. I need to configure this, that, and that.” Technically, it’s a lot of if-else type logic, a lot of algorithms like that, a lot of math, no deep learning, but there is this notion of understanding what environment I live in.
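The if-else reasoning Alex describes, where an agent combines locally detected facts with intent from the controller, can be sketched as a pure function. This is a hypothetical Python illustration: the `Environment` fields, role names, intent keys, and action strings are all assumptions, not the Netris agent's real logic. Because the function depends only on its inputs, the same environment and the same intent always produce the same plan, which is the property that lets agents stay stateless without talking to each other.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    role: str            # hypothetical: "switch" or "softgate", detected at boot
    dpdk_enabled: bool   # hypothetical: whether DPDK acceleration is already on


def plan_actions(env: Environment, intent: dict) -> list[str]:
    """Turn controller intent (user-defined requirements only) into local steps.

    The controller stores no device-specific config; every implementation
    detail below is derived from the locally detected environment.
    """
    actions: list[str] = []
    if env.role == "switch":
        for vpc in intent.get("vpcs", []):
            actions.append(f"switch: program VPC {vpc}")
    elif env.role == "softgate":
        if not env.dpdk_enabled:
            actions.append("softgate: enable DPDK acceleration")
        for rule in intent.get("nat_rules", []):
            actions.append(f"softgate: install NAT rule {rule}")
    else:
        raise ValueError(f"unknown role: {env.role}")
    return actions
```

For example, the same intent sent to a switch agent and a SoftGate agent yields different local steps, with no agent needing to know what the other did.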

Eric Chou (01:10:26): Right. Yeah. I was just playing. Of course, it’s smart. It’s not an algorithm in the sense of, I don’t know, natural language processing, but it is a self-sustaining brain that boots up, becomes self-aware, and starts processing information based on its environment. That is intelligence by itself.

Alex Saroyan (01:10:53): Yeah.

How to Try Netris

Eric Chou (01:10:55): Let me ask this. We talked about Netris, and we talked about all the agents, so how can people get started with Netris? Yeah, sorry to catch you off guard there.

Alex Saroyan (01:11:13): You mean how to test, how to learn?

Eric Chou (01:11:16): Yeah. If people are interested in trying it out, how do people get started with the Netris system? Is there a trial? Is there a way to get their hands dirty? What are the ways that they could get started?

Alex Saroyan (01:11:34): That’s a great question. When I was working as a network engineer, I remember that every time that our management would decide to buy new technology, or if I would decide to test one new technology, there were always these challenges that if it involves hardware, you need to register with the vendor. You need to wait until–

Eric Chou (01:12:05): Buy a license.

Alex Saroyan (01:12:07): Yeah. Or, get a license. We decided to make this testing simple. On our website, there’s a Try Now button. When you click Try Now, it shows you two options. If you happen to have an environment, switches and machines that you can use as a SoftGate, you can install Netris on-prem for free. We’re not concerned about that. That’s okay. People can use it for free. There’s no problem with that. Or, if you don’t have an environment, maybe you’re in the public cloud and you’re thinking about a greenfield deployment. You don’t have hardware yet, or maybe you have a data center, but you don’t have the time to put together that physical lab.

Alex Saroyan (01:13:04): We have created a lab environment. We call it a sandbox. The sandbox has six switches and two SoftGate nodes, and it has internet connectivity with upstream providers over IPv4 and IPv6. It has a bunch of Linux machines that you can load applications on and test. It has a pre-installed Kubernetes cluster where, again, you can load applications and test. From the website, you just fill in a form with your email and your name, and we send a reply email with credentials and documentation. So you get access to the sandbox in one minute. Then you can try everything with the documentation.

Alex Saroyan (01:14:03): We also have a Slack community. People join our Slack channel, where they can speak with solution architects, but they can also speak with the developers. They can speak with other users too. We sometimes see people saying, “Hey,” to each other: two different users asking each other questions like, what switches worked better for you, what cards do you use? Things like that. Or sometimes there’s a very, let’s say, Kubernetes-specific question, and our architect, who knows the ins and outs of Kubernetes at a very deep level, may answer this person immediately. We’re trying to create a nice community of professionals within Netris.

Eric Chou (01:15:01): Nice, nice. That’s very frictionless. If you just provide a sandbox, then you’re … I don’t know how much easier you could get. All you need is a browser and the ability to click your mouse. Yeah, no, that’s great. Yeah, I did notice that in all of your videos at Netris.ai/videos, at the end you always say, “Netris is easy. Here’s why you try us.” I like that. You must be trained well by your marketing person. It’s like, “Hey, Alex, every time you stop, you’ve got to do this tagline to [inaudible 01:15:41] people’s memory on it.” It stuck in my mind.

Eric Chou (01:15:44): I watched four videos in a row, and by the way, I think your team is very Kubernetes-savvy. We didn’t get too much into it, but it’s deep knowledge. It’s obvious that you’re very Kubernetes-focused. I didn’t even know Calico CNI could actually establish BGP directly. Yeah, definitely check out Netris. Join the Slack community and try it out. Where can people find you on social? Obviously, you have your Twitter handle up there, and where else? LinkedIn, maybe?

Alex Saroyan (01:16:19): Yeah. I’m active on Twitter, LinkedIn too, and Slack, always on our Slack.

Eric Chou (01:16:30): Yeah. Nice. Very cool. We’ll provide all the links in the show notes, of course. Obviously, you can find all the trial information on Netris’ homepage, which is just N-E-T-R-I-S.ai. Hey, Alex, I think we’re coming up a little over time, but it’s been a very, very interesting conversation. I am surprised at how much detail you dove into on Netris and the tech stack. It’s always interesting to me how people got here. One thing I wanted to know more about but didn’t have time for is your business experience.

Eric Chou (01:17:13): Obviously, you raised some funds, seed money and a series A, and now you’re trying to expand. That’s an area, I think, for another episode, if you’re willing to share some of that as well.

Alex Saroyan (01:17:27): Sure. Looking forward to it, and I very much enjoyed this conversation. It came across like a casual conversation. Sure. I’m looking forward to discussing this further.

Eric Chou (01:17:49): Yeah, absolutely. I don’t know. I didn’t even, without that red light, I don’t even think I was recording. It’s just like two nerds trying to discuss and talk about technology.

Alex Saroyan (01:18:01): Yup.

Eric Chou (01:18:01): Thank you again for being here. I appreciate your time.

Alex Saroyan (01:18:04): Thank you so much.

Eric Chou (01:18:06): Thanks for listening to the Network Automation Nerds Podcast today. Find us on Apple Podcasts, Google Podcasts, Spotify, and all the other podcast platforms. Until next time. Bye-bye.